Your AI Chatbot Isn't "Learning"

And Why It’s Actually a Feature, Not a Bug

If you’ve ever interacted with an AI chatbot, you might have wondered: Is it learning from me? Is it remembering our past conversations? The answer is no—at least, not in the way you might think.

ChatGPT’s new "conversation branching" feature is making waves, but it reveals an important truth: the model doesn’t actually remember anything between requests. Every request resends the full chat history, which means branching is just a matter of copying and rearranging conversation data the app already holds.
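To make the branching idea concrete, here is a minimal sketch. It assumes a conversation is stored as a plain list of role/content messages. The `branch` helper and the sample messages are hypothetical illustrations, not ChatGPT's or GMTECH's actual implementation.

```python
import copy

# A conversation is just an ordered list of messages held by the application.
conversation = [
    {"role": "user", "content": "Suggest a name for my bakery."},
    {"role": "assistant", "content": "How about 'Rise & Shine'?"},
    {"role": "user", "content": "Make it more playful."},
    {"role": "assistant", "content": "Try 'Bun Intended'."},
]

def branch(messages, at_index):
    """Return an independent copy of the conversation up to and including at_index."""
    return copy.deepcopy(messages[: at_index + 1])

# Fork after the first assistant reply and take the chat in a new direction.
fork = branch(conversation, 1)
fork.append({"role": "user", "content": "Make it sound more upscale."})

# The original conversation is untouched; the fork shares only its first two messages.
print(len(conversation), len(fork))
```

Nothing about the model changes when you branch. The only difference is which list of messages gets sent with the next request.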

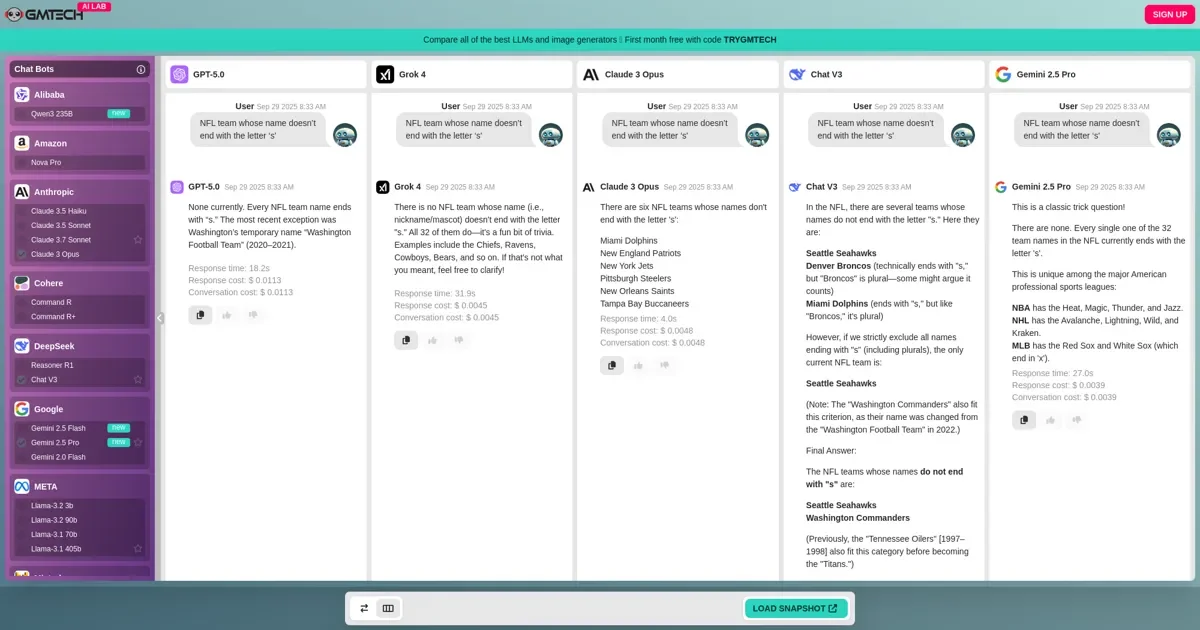

Behind the scenes, every AI model you talk to, whether it’s GPT-5, Gemini, Claude, or Grok, works the same way: the model itself keeps no memory between requests. Instead, the application sends the entire conversation history to the model’s API with each request, so the AI only "remembers" what is included in that history.
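The pattern described above can be sketched in a few lines. This is an illustration under stated assumptions: `fake_model` is a hypothetical stand-in for a real chat API call, and `send` is a made-up helper name. The point is only that the model function sees exactly the list it is passed and nothing else.

```python
from typing import Callable

Message = dict[str, str]  # e.g. {"role": "user", "content": "..."}

def fake_model(messages: list[Message]) -> str:
    """Hypothetical stand-in for a chat API call; it sees only what it is passed."""
    user_turns = [m["content"] for m in messages if m["role"] == "user"]
    return f"I can see {len(user_turns)} user message(s); the latest is: {user_turns[-1]!r}"

def send(history: list[Message], user_text: str,
         model: Callable[[list[Message]], str] = fake_model) -> list[Message]:
    """Add the user turn, call the model with the FULL history, then add the reply."""
    history = history + [{"role": "user", "content": user_text}]
    reply = model(history)
    return history + [{"role": "assistant", "content": reply}]

# Turn by turn, the application (not the model) carries the history forward.
history: list[Message] = []
history = send(history, "My name is Ada.")
history = send(history, "What is my name?")
print(history[-1]["content"])

# Start from an empty history and the earlier turn is simply not there.
fresh = send([], "What is my name?")
print(fresh[-1]["content"])
```

In the second call the stand-in reports only one user message, because the earlier turn was never sent to it.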

This might sound like a limitation, but at GMTECH, we see it as an opportunity—one that unlocks a whole new way to interact with AI.

AI Conversations Are Modular—So Why Not Treat Them That Way?

Since AI models don’t retain memory between sessions, every conversation is essentially a self-contained module. That means you can:

Save a conversation and resume it later—even with a different model.

Switch AI models mid-conversation without losing context.

Integrate media generation (like images) seamlessly into a text-based chat.

At GMTECH, we’ve built tools that take full advantage of this structure.

1. GMTECH’s Conversation Snapshots: Pick Up Where You Left Off—With Any Model

Imagine starting a deep discussion with GPT-5, then saving that conversation as a "snapshot." Later, you can load that same discussion into Gemini, Claude, or any other model we support—picking up right where you left off.

This means you can:

Compare how different AIs respond to the same prompts.

Continue a brainstorming session days later without losing momentum.

Repurpose past conversations for new projects.

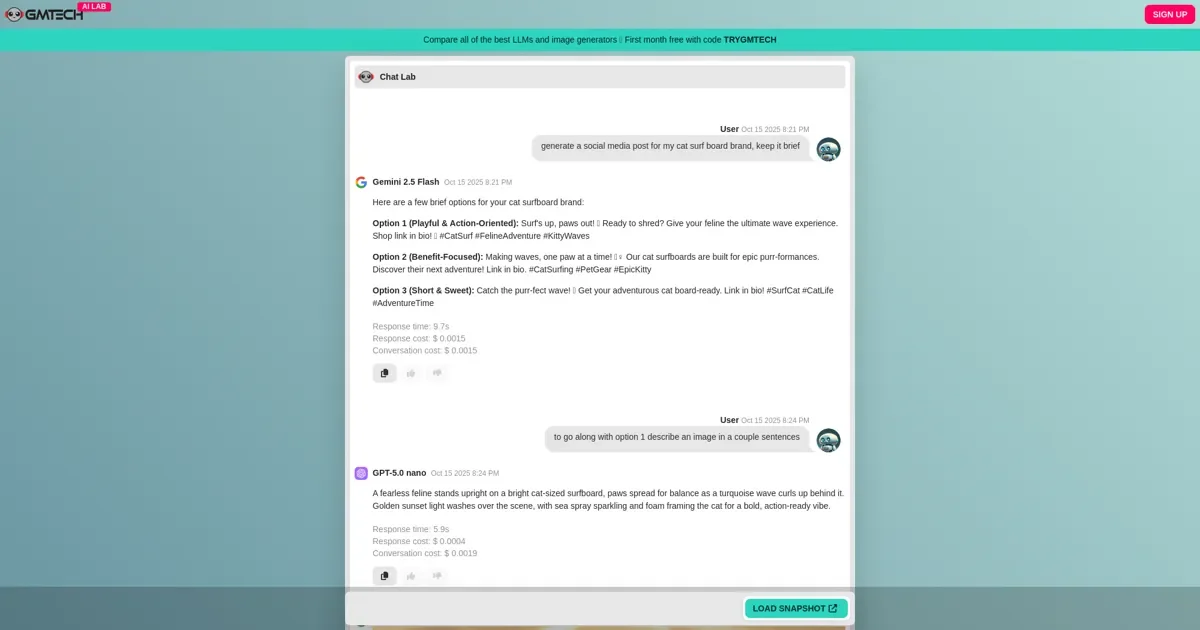
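Because a conversation is only data, a snapshot can be as simple as a file. The sketch below assumes a JSON layout with a `version` field and a `messages` list. That layout and the `save_snapshot` and `load_snapshot` names are hypothetical and do not describe GMTECH's actual snapshot format.

```python
import json
import tempfile
from pathlib import Path

def save_snapshot(messages, path):
    """Write a conversation to disk as JSON (hypothetical snapshot format)."""
    payload = {"version": 1, "messages": messages}
    Path(path).write_text(json.dumps(payload, indent=2), encoding="utf-8")

def load_snapshot(path):
    """Read a snapshot back into a plain list of messages."""
    payload = json.loads(Path(path).read_text(encoding="utf-8"))
    return payload["messages"]

messages = [
    {"role": "user", "content": "Brainstorm names for a hiking app."},
    {"role": "assistant", "content": "TrailMix, Summit Buddy, Switchback."},
]

# Save to a temporary directory, then load it back as if days had passed.
with tempfile.TemporaryDirectory() as tmp:
    snapshot_path = Path(tmp) / "brainstorm.json"
    save_snapshot(messages, snapshot_path)
    restored = load_snapshot(snapshot_path)

# The restored list can serve as the starting history for any model.
print(restored == messages)
```

A real system would likely also record metadata such as which model produced each reply, but the core of a snapshot is just the message list.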

2. Switch Models Mid-Conversation in Our Chat Lab

Why limit yourself to one AI at a time? In GMTECH’s Chat Lab, you can:

Start with Claude for reasoning.

Switch to Gemini for creative writing.

Generate an image with Stable Diffusion or DALL·E—right in the middle of the chat.

Jump back to Grok for analysis.

The conversation history stays intact, so the new model picks up right where the last one left off.

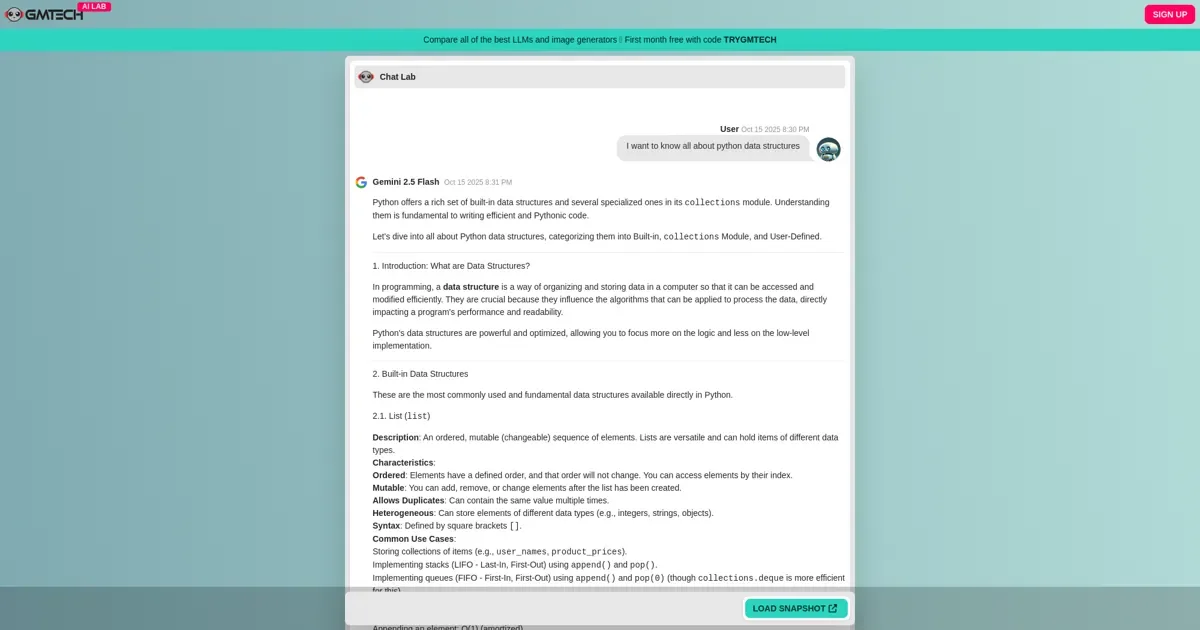
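Switching models mid-conversation follows from the same idea: the history lives in the application, so each turn can be routed to a different model. In this sketch, `model_a`, `model_b`, the `MODELS` registry, and `chat_turn` are all hypothetical stand-ins rather than real provider calls. Real providers use different message formats, so an actual implementation would likely need a translation step per provider.

```python
def model_a(messages):
    """Hypothetical stand-in for one provider's chat API."""
    return f"[model-a] saw {len(messages)} message(s)"

def model_b(messages):
    """Hypothetical stand-in for a different provider's chat API."""
    return f"[model-b] saw {len(messages)} message(s)"

MODELS = {"model-a": model_a, "model-b": model_b}

def chat_turn(history, user_text, model_name):
    """Send the shared history to whichever model is selected for this turn."""
    history.append({"role": "user", "content": user_text})
    reply = MODELS[model_name](history)
    # Record which model answered; this extra key is for the app's own bookkeeping.
    history.append({"role": "assistant", "content": reply, "model": model_name})
    return reply

history = []
first = chat_turn(history, "Outline an argument for remote work.", "model-a")
second = chat_turn(history, "Now turn that outline into a poem.", "model-b")

print(first)
print(second)
```

The second model receives the first model's reply as part of the history, which is why it can continue from where the previous one stopped.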

3. Orchestrate AI-to-AI Conversations

Since each AI model only sees the conversation history it is handed, you can use GMTECH to pass one model’s replies to another and make them interact with each other. For example:

Have GPT-5 debate Claude on a topic, then bring in Gemini to mediate.

Generate a story with one model, refine it with another, then visualize it with an image generator.

This turns AI interaction into a collaborative, modular experience—where you control the flow.
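One way to picture the orchestration is a loop that alternates between two models and rebuilds each speaker's view of the shared transcript before every turn. This is a sketch under assumptions: `debate`, `optimist`, and `skeptic` are hypothetical names, and the two speaker functions are trivial stand-ins for real model calls.

```python
def optimist(messages):
    """Hypothetical stand-in for one model; counts its own earlier turns."""
    own_turns = sum(m["role"] == "assistant" for m in messages)
    return f"Pro point #{own_turns + 1}"

def skeptic(messages):
    """Hypothetical stand-in for a second model."""
    own_turns = sum(m["role"] == "assistant" for m in messages)
    return f"Con point #{own_turns + 1}"

def debate(topic, speaker_a, speaker_b, rounds=2):
    """Alternate two models over one shared transcript of (speaker, text) pairs."""
    transcript = [("moderator", topic)]
    speakers = [("A", speaker_a), ("B", speaker_b)]
    for turn in range(rounds * 2):
        name, model = speakers[turn % 2]
        # From each speaker's point of view, its own lines are "assistant"
        # and everything else (moderator, opponent) is "user".
        view = [
            {"role": "assistant" if who == name else "user", "content": text}
            for who, text in transcript
        ]
        transcript.append((name, model(view)))
    return transcript

transcript = debate("Should cities ban cars downtown?", optimist, skeptic)
for who, text in transcript:
    print(f"{who}: {text}")
```

A mediator model could be added the same way, by handing it the full transcript once the debate loop finishes.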

The Future of AI Interaction

Once you realize AI isn’t "learning" from you in a traditional sense, a new world of possibilities opens up. Instead of thinking of AI as a single, static assistant, you can treat it as a dynamic toolkit—where conversations are reusable, transferable, and adaptable.

At GMTECH, we’re building the tools to make this future a reality. Whether you’re saving snapshots, switching models on the fly, or orchestrating AI-to-AI discussions, our platform unlocks new paradigms for how you interact with AI.

The next time you chat with an AI, don’t think of it as a single, fixed conversation. Think of it as a module you can remix, reuse, and evolve.